Comments on the Consolidated Framework for Collaboration Research.

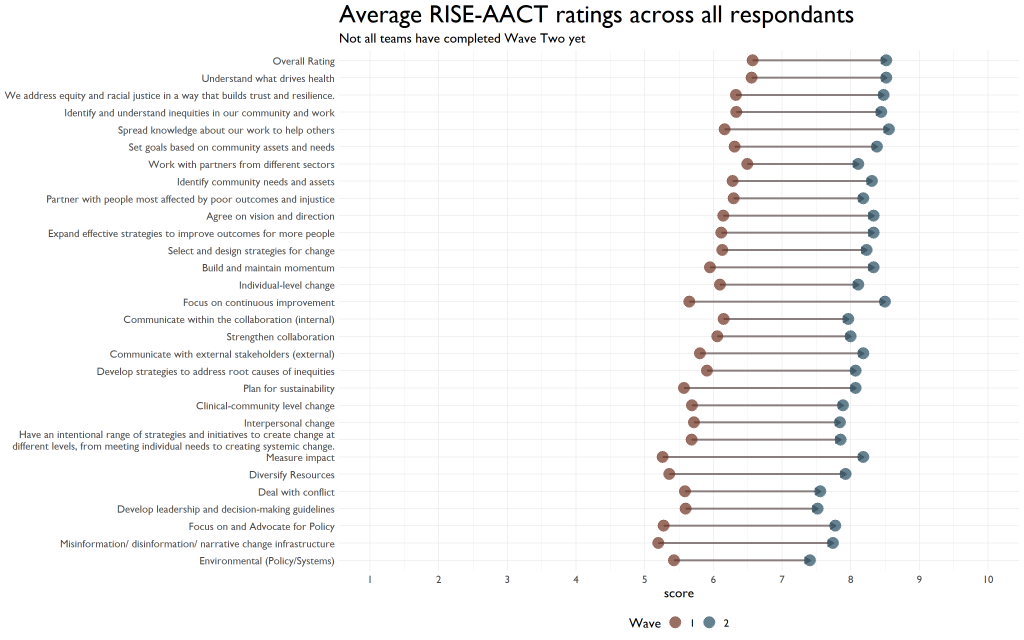

In the RISE project, our friend and colleague Jason Turi is coordinating our inquiry into how coalitions work with one another. One of the major sources of that data is the AACT, where we have a number of items that show how coalition functioning has grown over time.

We also have a fair amount of qualitative data that we'll be coding using the framework from a study published two years ago.

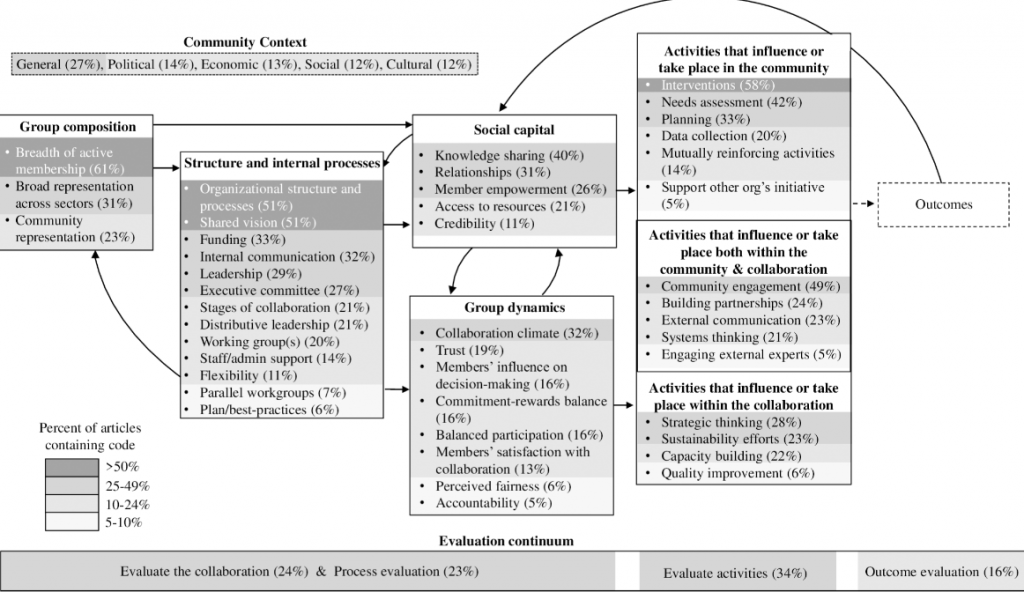

A Consolidated Framework for Collaboration Research from a systematic review of theories, models, frameworks and principles for cross-sector collaboration

I'm not going to type that title out again, but here is the link to the article. Like a lot of review articles, a huge amount of it is fluff. By that, I mean there is the standard intro that says, "a synthesis is needed because", and "our methods can be found in the PRISMA diagram". Really, there are only two paragraphs and two figures in the entire article that are worthwhile.

But they are unbelievable figures.

This first figure shows how often each construct appears across the reviewed articles, limited to constructs that show up in five or more of them, clustered into general processes. This is extremely helpful in charting, at minimum, what people report as important in community coalitions.

Now, there is a clear reporting bias here. The authors of the reviewed articles reported the constructs they had the measurement infrastructure to capture. But still, there are some major surprises here. Only 6% of articles mention planning and best practices? That's not good! Similarly, there are very low numbers for capacity building and quality improvement. "Capacity building" is standard practice in any coalition or grant-funding initiative, and to not see it acknowledged is, frankly, bonkers.

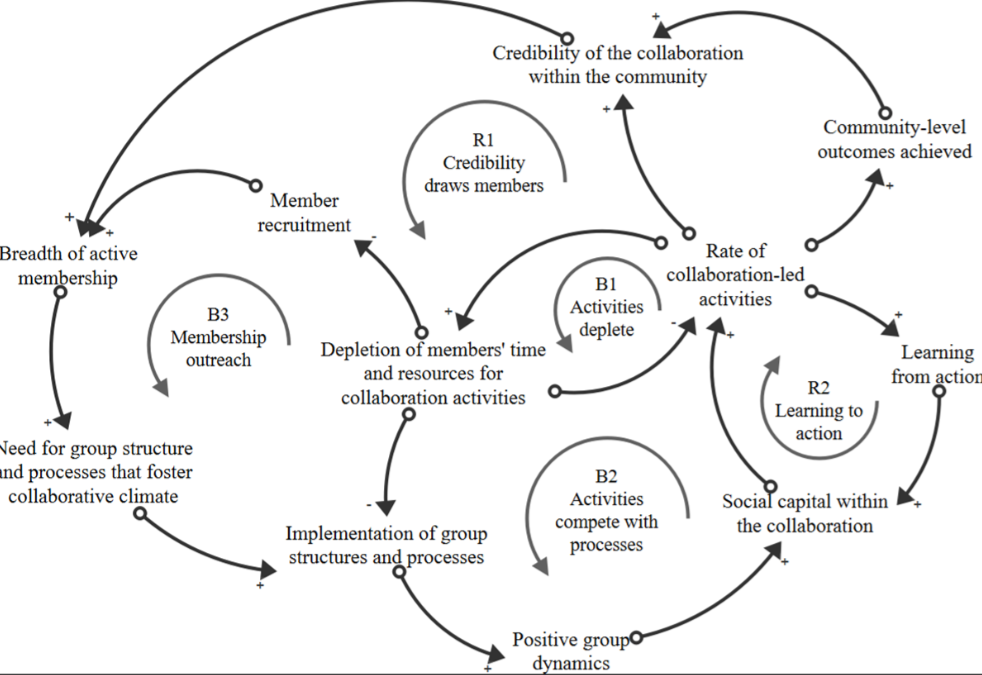

Relationships between constructs

To be clear, these are not the relationships that the authors found. These are hypothesized relationships between these constructs and what the feedback loops might be. When I see a diagram like that, I think of qualitative comparative analysis (QCA) because of its capacity to find necessary and sufficient factors. Depending on how we code the results from our qualitative interviews, that might be a strong possibility here.
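For anyone who hasn't run a QCA before, here is a minimal sketch of the two "consistency" scores it uses to judge whether a condition looks sufficient or necessary for an outcome. It uses the standard fuzzy-set formulas, which reduce to simple proportions when cases are coded crisply as 0 or 1. The condition name, outcome name, and case codes below are hypothetical placeholders for illustration only, not data from RISE, the AACT, or the article.

```python
from typing import Sequence


def _overlap(x: Sequence[float], y: Sequence[float]) -> float:
    """Sum of each case's minimum membership in condition X and outcome Y."""
    if len(x) != len(y):
        raise ValueError("x and y must describe the same cases")
    return sum(min(xi, yi) for xi, yi in zip(x, y))


def sufficiency_consistency(x: Sequence[float], y: Sequence[float]) -> float:
    """How consistently cases with condition X also show outcome Y: sum(min(x, y)) / sum(x)."""
    total = sum(x)
    if total == 0:
        raise ValueError("condition X is absent in every case")
    return _overlap(x, y) / total


def necessity_consistency(x: Sequence[float], y: Sequence[float]) -> float:
    """How consistently cases with outcome Y also show condition X: sum(min(x, y)) / sum(y)."""
    total = sum(y)
    if total == 0:
        raise ValueError("outcome Y is absent in every case")
    return _overlap(x, y) / total


# Hypothetical crisp-set codes (1 = present, 0 = absent) for six coalitions.
capacity_building = [1, 1, 0, 1, 0, 1]
improved_functioning = [1, 1, 0, 1, 0, 0]

print(sufficiency_consistency(capacity_building, improved_functioning))  # 0.75
print(necessity_consistency(capacity_building, improved_functioning))  # 1.0
```

With these made-up codes, capacity building would look necessary (every improving coalition has it) but not sufficient (one coalition has it without improving). A real analysis would also build a truth table across combinations of conditions rather than testing one condition at a time.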

I can’t say the article itself is worth your time, for the aforementioned reasons. But, if you focus in on the results, there are some gems that will hopefully help us more effectively assess coalition functioning going into year two.